Algorithms & Society

My research studies the foundations of machine learning with a focus on social questions. I am broadly working towards developing theoretical as well as practical tools to support safe, reliable, and trustworthy machine learning with a positive impact on society. This encompasses technical challenges around interactive machine learning, optimization in dynamic environments, and resource-efficient learning, as well as interdisciplinary questions: understanding the social dynamics around algorithms, quantifying their impact on digital economies, and developing tools to support the responsible use of machine learning models in social science research.

Featured Research Projects

Evaluating LLMs as risk scores

A Python library that systematically translates American Community Survey (ACS) data into natural-text prompts in order to benchmark LLM outputs against US population statistics. The individual prediction tasks are non-realizable, meaning the outcome is not fully determined by the available features. They therefore provide insight into a model's ability to express the natural uncertainty in human outcomes.

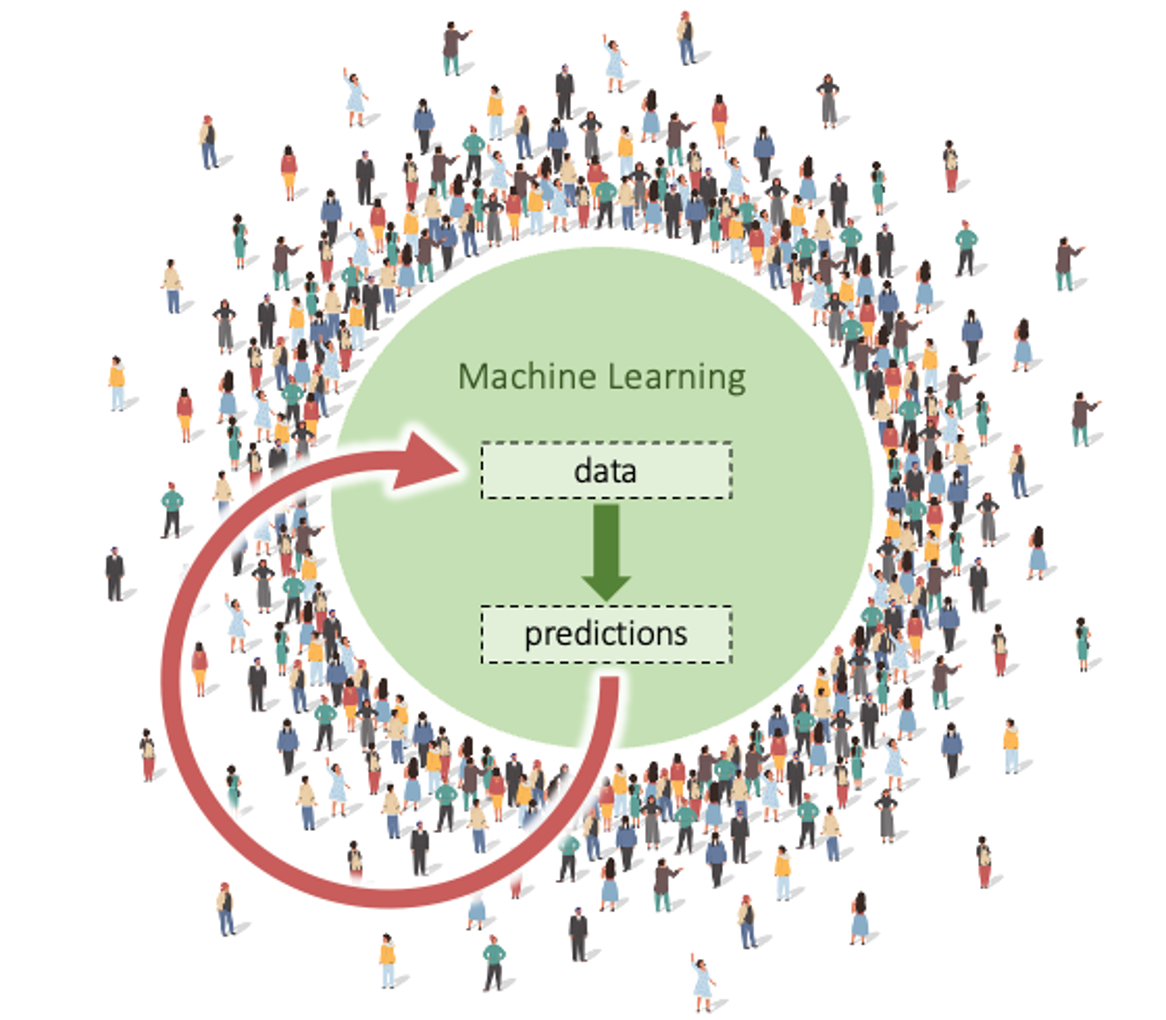

Performative Prediction

- Performative Prediction. ICML 2020.

- Survey paper. arXiv 2023.

- Tutorial. UAI 2024.

Performative power of online search

A Chrome extension that measures, through randomized experiments, how the arrangement of content on search pages impacts user click behavior.

- Project website at https://powermeter.is.tue.mpg.de/

- Experimental insights to be published at NeurIPS 2024.

Algorithmic collective action

- Theoretical framework. ICML 2022.

- Application to sequential recommender systems. NeurIPS 2024.

- A collection of documented use cases. GitHub.

IBM Snap Machine Learning

Snap ML is a library that provides resource-efficient and fast training of popular machine learning models on modern computing systems.

More than 400k downloads on PyPI.

https://www.zurich.ibm.com/snapml/

Publications and Preprints

Peer-reviewed Workshop Contributions

Patents

US20210264320A1 - T. Parnell, A. Anghel, N. Ioannou, N. Papandreou, C. Mendler-Dünner, D. Sarigiannis, H. Pozidis.

US11562270B2 - M. Kaufmann, T. Parnell, A. Kourtis, C. Mendler-Dünner.

US11573803B2 - N. Ioannou, C. Dünner, T. Parnell.

US11295236B2 - C. Dünner, T. Parnell, H. Pozidis.

US11315035B2 - T. Parnell, C. Dünner, H. Pozidis, D. Sarigiannis.

US11461694B2 - T. Parnell, C. Dünner, D. Sarigiannis, H. Pozidis.

US11301776B2 - C. Dünner, T. Parnell, H. Pozidis.

US10147103B2 - C. Dünner, T. Parnell, H. Pozidis, V. Vasileiadis, M. Vlachos.

US10839255B2 - K. Atasu, C. Dünner, T. Mittelholzer, T. Parnell, H. Pozidis, M. Vlachos.